The Biggest Barrier to AI Success Isn’t Technical. It’s Linguistic.

Most confusion around artificial intelligence right now isn’t actually technical. It’s linguistic.

Watch a typical business conversation about this technology and a familiar pattern emerges. Someone says, “We’re rolling out AI.” Another person immediately asks, “Is that an agent or just ChatGPT?” A third chimes in with, “We need RAG for compliance.”

In the rush to execute, nobody slows down to check whether they are even talking about the same thing. Usually, they are not.

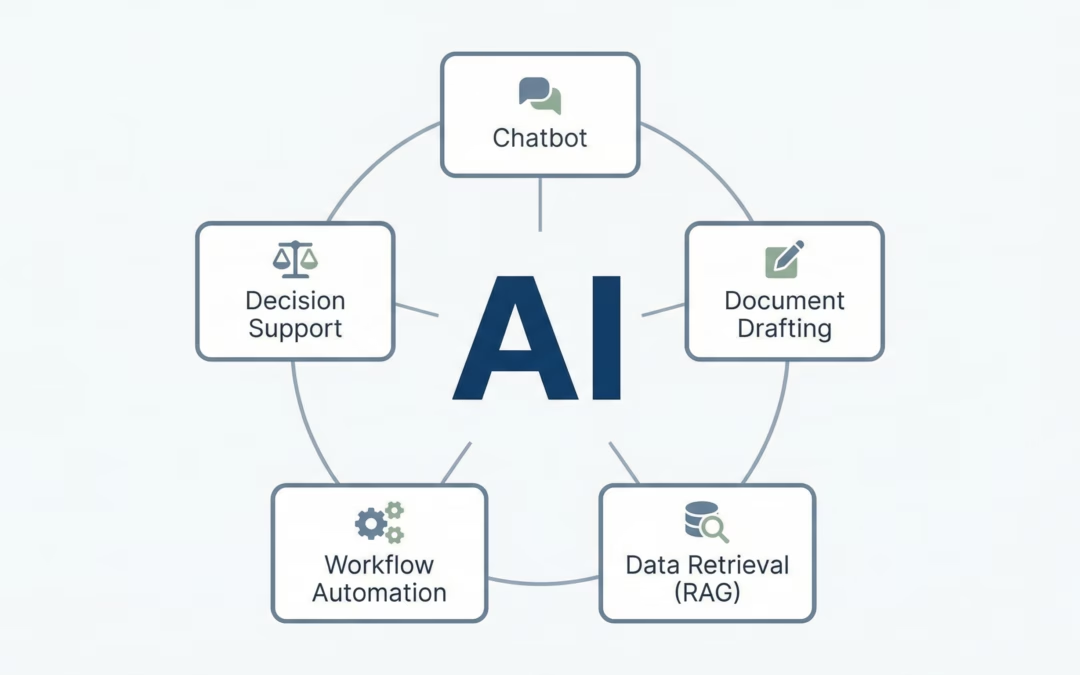

To one person in that room, “AI” might mean a basic chatbot answering FAQs. To another, it is an automated workflow that drafts complex documents. To a third, it is a secure model querying internal databases, and to a fourth, it is an autonomous system taking actions without human intervention.

They are using the same word, but they are discussing four vastly different scenarios with different risks, different costs, and different control requirements.

This is a major reason so many AI projects stall or quietly fail. It is not because the technology is magical or too difficult, and it is not because staff resist change. It is because words are doing too much work without shared definitions.

When you strip the jargon back to one clean sentence per term, something interesting happens. AI stops sounding mystical. It starts behaving like what it actually is: a system that produces plausible outputs, not guaranteed truths. It is useful, but fallible. It is fast, but context-blind without help.

Once you realize this, the real design questions surface. Where does the data come from? Who signs off on the output? What happens when the model is wrong? Which parts of this process should never be automated?

Clarity must come before capability. If teams cannot define what they are deploying, they cannot govern it, trust it, or scale it. True AI literacy is not about learning to code or adopting new tools; it is about agreeing on what words mean before those words start making decisions for you.

Most organizations do this backwards, and then they wonder why things feel fuzzy.

To sharpen your thinking before your next meeting, here is a plain-language breakdown of the core terminology.

CORE MODELS AND CONCEPTS

AI (Artificial Intelligence)

A broad term for machines performing tasks that normally require human intelligence, such as reasoning, language, or perception.

ML (Machine Learning)

A subset of AI where systems learn patterns from data rather than being explicitly programmed.

DL (Deep Learning)

A type of machine learning that uses multi-layer neural networks to model complex patterns, especially in language and vision.

LLM (Large Language Model)

A neural network trained on massive text datasets to predict the next piece of text, which lets it generate human-like language.

Foundation Model

A large, general-purpose AI model trained once and adapted for many different tasks.

Generative AI

AI systems that create new content, such as text, images, audio, or code, rather than just analyzing data.

DATA, KNOWLEDGE, AND CONTEXT

RAG (Retrieval-Augmented Generation)

A method that retrieves relevant documents from an external source and passes them to the model along with the question, so the generated response is grounded in those documents.
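
For readers who want to see the moving parts, here is a minimal Python sketch of the retrieve-then-prompt step. It assumes simple word overlap in place of embedding search, and the functions `retrieve` and `build_grounded_prompt` are hypothetical names for illustration. The actual call to a language model is left out; the resulting prompt is what would be sent to it.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercase words with punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the question.

    Word overlap is a stand-in assumption; real RAG systems usually
    compare embeddings instead.
    """
    q = _words(question)
    ranked = sorted(documents, key=lambda doc: len(q & _words(doc)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved passages into the prompt so the model answers from them."""
    sources = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


docs = [
    "Expense claims must be submitted within 30 days.",
    "The office is closed on public holidays.",
    "Claims over 500 dollars need manager approval.",
]
prompt = build_grounded_prompt("When must expense claims be submitted?", docs)
print(prompt)
```

The instruction to say so when the sources lack an answer is one common way to discourage the model from filling gaps with guesses.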

Embeddings

Lists of numbers (vectors) that represent text, images, or other data so that items with similar meaning end up close together, which lets AI measure similarity and meaning.
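
A common way to compare embeddings is cosine similarity, which scores how closely two vectors point in the same direction. The sketch below assumes tiny, made-up three-dimensional vectors; real embeddings come from a model and typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented for illustration only.
embeddings = {
    "invoice": [0.9, 0.1, 0.0],
    "bill": [0.8, 0.2, 0.1],
    "holiday": [0.0, 0.3, 0.9],
}

print(cosine_similarity(embeddings["invoice"], embeddings["bill"]))
print(cosine_similarity(embeddings["invoice"], embeddings["holiday"]))
```

Here "invoice" and "bill" score far higher than "invoice" and "holiday", which is the property that lets a system treat them as related in meaning.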

Vector Database

A database designed to store embeddings and quickly retrieve the most semantically similar items.
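
The core job of a vector database can be sketched as "store vectors, then return the nearest ones to a query." The `TinyVectorStore` class below is a hypothetical, brute-force stand-in that compares the query against every stored item; production vector databases use specialized indexes to do this quickly at scale. The vectors are invented for illustration.

```python
import math
from dataclasses import dataclass, field


def _cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


@dataclass
class TinyVectorStore:
    """Brute-force, in-memory stand-in for a vector database (illustrative only)."""

    items: list[tuple[str, list[float]]] = field(default_factory=list)

    def add(self, text: str, vector: list[float]) -> None:
        self.items.append((text, vector))

    def query(self, vector: list[float], k: int = 1) -> list[str]:
        """Return the k stored texts whose vectors are most similar to the query."""
        ranked = sorted(self.items, key=lambda item: _cosine(vector, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = TinyVectorStore()
store.add("Refund policy", [0.9, 0.1, 0.0])
store.add("Holiday calendar", [0.0, 0.2, 0.9])
store.add("Returns process", [0.8, 0.3, 0.1])
print(store.query([1.0, 0.2, 0.0], k=2))
```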

Context Window

The maximum amount of information, measured in tokens, that an AI model can consider at one time when generating a response.
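
Because the context window is finite, applications often drop older conversation turns so the most recent ones still fit. This sketch keeps the newest messages that fit within a budget. It assumes a crude token count based on whitespace-separated words; real models use their own tokenizers, so actual counts will differ.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in: counts whitespace-separated words. Real tokenizers differ."""
    return len(text.split())


def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # everything older than this is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "Please summarize the Q3 sales report.",
    "Here is the summary of Q3 sales.",
    "Now compare it with Q2.",
]
print(fit_to_context(history, max_tokens=12))
```

With a budget of 12, the oldest request no longer fits, which is exactly how a model can "forget" the start of a long conversation.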

Knowledge Cutoff

The point in time after which a model has no built-in knowledge unless connected to live data.

Grounding

Anchoring AI responses to verified data sources to reduce hallucinations.

PROMPTING AND INTERACTION

Prompt Engineering

The practice of structuring inputs to guide AI systems toward more accurate or useful outputs.

Zero-Shot Learning

An AI performing a task from instructions alone, without being shown any examples of that task in the prompt.

Few-Shot Learning

Improving AI performance by providing a small number of examples within the prompt.
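
The difference between zero-shot and few-shot prompting is simply whether worked examples are included. The hypothetical `build_prompt` helper below assembles both versions from the same task; the sentiment-labeling task and example messages are invented for illustration.

```python
from typing import Sequence


def build_prompt(task: str, new_input: str, examples: Sequence[tuple[str, str]] = ()) -> str:
    """Assemble a prompt: no examples gives zero-shot, a few examples gives few-shot."""
    parts = [task]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    parts.append(f"Input: {new_input}\nOutput:")  # the model completes this line
    return "\n\n".join(parts)


task = "Classify the sentiment of each customer message as Positive or Negative."
examples = [
    ("The delivery was quick and the staff were friendly.", "Positive"),
    ("I waited two weeks and nobody replied.", "Negative"),
]
zero_shot = build_prompt(task, "The invoice was wrong again.")
few_shot = build_prompt(task, "The invoice was wrong again.", examples)
print(few_shot)
```

The examples show the model the expected format and labels, which is often enough to make its answers more consistent without any retraining.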

System Prompt

Instructions, usually hidden from the end user, that define how an AI should behave, reason, or respond.

Temperature

A setting that controls how random an AI’s outputs are: lower values make responses more predictable, higher values make them more varied and creative.
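
In many language models, temperature works by dividing the model's raw scores for each candidate next word before converting them into probabilities with a softmax. The scores below are hypothetical. A low temperature concentrates probability on the top choice; a high temperature spreads it out, making less likely words more likely to be picked.

```python
import math


def softmax_with_temperature(scores: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate next words
for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(scores, t)])
```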

AUTOMATION AND AGENTS

AI Agent

An AI system that can plan, take actions, and use tools autonomously to achieve goals.

Agentic Workflow

A process where AI agents execute multi-step tasks with minimal human intervention.

Human-in-the-Loop (HITL)

A design approach where humans review, approve, or intervene in AI outputs.
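
At its simplest, human-in-the-loop means the AI output cannot leave the system until a person signs off. In this sketch, `reviewer` is a hypothetical callback standing in for whatever approval step an organization actually uses, such as a button in an interface or a ticket in a review queue.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    approved: bool
    output: str


def human_in_the_loop(draft: str, reviewer: Callable[[str], bool]) -> Decision:
    """Route an AI-generated draft through a human before it is released."""
    if reviewer(draft):
        return Decision(approved=True, output=draft)
    # Rejected drafts are never sent; they go back for rework instead.
    return Decision(approved=False, output="")


# Hypothetical reviewer rule: reject any draft that makes a guarantee.
def reviewer(draft: str) -> bool:
    return "guarantee" not in draft.lower()


print(human_in_the_loop("We guarantee a full refund.", reviewer))
print(human_in_the_loop("Your refund request has been received.", reviewer))
```

In practice the reviewer is a person, not a rule; the point of the design is that the release decision sits outside the model.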

Orchestration

The coordination of multiple AI models, tools, and workflows into a single system.

Tool Calling

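An AI’s ability to request that external APIs, software, or actions be run during a task, with the surrounding application executing the call and returning the result to the model.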
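
Tool calling usually works as a handoff: the model replies with a structured request instead of plain text, and the application decides whether and how to run it. The sketch below assumes a simple JSON shape with `name` and `arguments` fields; each AI provider defines its own format, and `get_order_status` is a hypothetical tool.

```python
import json
from typing import Any, Callable


def get_order_status(order_id: str) -> str:
    # Hypothetical tool; a real one would query an order system.
    return f"Order {order_id} has shipped."


# Only tools registered here can ever be run, whatever the model asks for.
TOOLS: dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}


def execute_tool_call(model_output: str) -> Any:
    """Parse a tool request emitted by the model and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Model requested unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# Pretend the model replied with this JSON instead of plain text.
reply = '{"name": "get_order_status", "arguments": {"order_id": "A123"}}'
print(execute_tool_call(reply))
```

The result would then be passed back to the model so it can compose its final answer.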

BUSINESS AND DEPLOYMENT

Inference

The act of running a trained AI model to generate outputs from new inputs.

Fine-Tuning

Further training a pre-trained model on specific data to adapt it to a narrower use case.

API (Application Programming Interface)

A structured way for software systems to communicate with each other; in AI, it is typically how applications send requests to a model and receive its outputs.

Latency

The delay between submitting a request to an AI system and receiving a response.

Token

A unit of text (roughly a word or part of a word) used to measure input and output size, and often to price usage.

RISK, SAFETY, AND GOVERNANCE

Hallucination

When an AI confidently produces information that is false or unsupported.

Model Drift

Performance degradation over time as real-world data changes.

Bias

Systematic errors in AI outputs, often caused by imbalanced or flawed training data.

Explainability

The ability to understand why an AI produced a particular result.

Guardrails

Constraints and controls designed to keep AI behavior safe, compliant, and predictable.
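
One simple kind of guardrail is an output check that runs before a response reaches the user. The two rules below are hypothetical examples, a pattern resembling a 16-digit card number and a phrase a compliance team might forbid; real guardrails typically layer many checks on both inputs and outputs.

```python
import re

# Hypothetical policy rules, for illustration only.
BLOCKED_PATTERNS = {
    "possible card number": re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),
    "promise of guaranteed returns": re.compile(r"\bguaranteed returns?\b", re.IGNORECASE),
}


def check_output(text: str) -> list[str]:
    """Return the names of any rules the output breaks; an empty list means it may pass."""
    return [name for name, pattern in BLOCKED_PATTERNS.items() if pattern.search(text)]


def release(text: str) -> str:
    """Withhold the response if any rule is broken, otherwise pass it through."""
    violations = check_output(text)
    if violations:
        return "Response withheld: " + ", ".join(violations)
    return text


print(release("Your refund is on its way."))
print(release("This fund offers guaranteed returns of 12%."))
```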

INFRASTRUCTURE AND ACCESS

Cloud AI

AI models hosted and accessed via cloud platforms rather than running locally.

Edge AI

AI processing performed directly on devices rather than in the cloud.

Access Layer

A system that simplifies how users interact with AI by hiding prompt design and technical complexity and managing risk behind the scenes.

Stateless Processing

AI interactions where no conversation history or data is retained after execution.

CULTURAL AND STRATEGIC TERMS

AI Literacy

A person’s ability to understand what AI can and cannot do.

AI Maturity

How advanced and embedded AI usage is within an organization.

Shadow AI

Unapproved or unmanaged AI use by employees outside official systems.

Democratized AI

Making AI tools accessible to non-technical users, not just engineers.

THE BOTTOM LINE

Most confusion around AI is not technical. It comes from words being used without shared definitions.

Once you strip these terms down to one clean sentence, AI stops sounding mystical and starts behaving like what it actually is: a powerful, fallible, probabilistic tool that needs context, boundaries, and human judgment.

This glossary is a starting point, not a belief system. Save it for the next time “AI” means five different things in one meeting.